Role of Data Ingestion in Optimizing Data Engineering

Table of Contents

- Data Ingestion in Data Engineering

- Importance of Data Ingestion in Data Engineering Project

- Top 5 Data Ingestion Best Practices

- Conclusion

- How can TestingXperts Help with Data Ingestion?

The amount of data created each year is expected to reach 180 zettabytes by 2025. The shift to remote work and the variety of data captured from critical sources such as stock exchanges, smartphones, healthcare systems, and power grids keep adding new data sources, even as storage capacity grows. This growth, together with rising demand for fast analytics, is pushing businesses to find new ways to store and process data. This is where data engineering comes in, and the first thing that needs attention is the data ingestion strategy. To realize the full potential of their data assets, businesses must ingest high-quality data and import it into a database for immediate use or storage.

Data integration and ingestion play an important role in business functions such as marketing, human resources, and sales. They allow businesses to obtain actionable insights from data, leading to informed decision-making and strategy development. The telecom and IT sectors have also benefited, using data ingestion to consolidate data from customer records, third-party systems, and internal databases. Ingestion is a key component in efficiently managing, analyzing, and leveraging data for decision-making and strategic planning across business operations.

Data Ingestion in Data Engineering

Data ingestion in data engineering is the process of retrieving data from multiple sources and transferring it into a designated data warehouse or database. Then, businesses can use that data to perform data analytics and transformation. It involves the following steps:

• Collecting data from multiple sources, including databases, IoT devices, external data services, and cloud storage.

• Importing the collected data into a storage system such as a database, data lake, or data warehouse.

• Processing the data, which involves cleaning, transforming, and structuring it for analytics.

• Storing the processed data in a secure, scalable, and efficient manner to facilitate easy access and analysis.

• Managing the data continuously to ensure accuracy, security, and consistency over time.
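The steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the CSV text, the `raw_payments` and `payments` table names, and the rule that rows without an amount are rejected are all hypothetical, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source payload; in practice this would come from a file, API, or device.
RAW_CSV = "id,name,amount\n1, Alice ,10.5\n2,Bob,\n3, Carol ,7\n"

def collect(raw_text):
    """Collect: read rows from a source (here, CSV text) as dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def import_raw(conn, rows):
    """Import: land the rows untouched in a staging table."""
    conn.execute("CREATE TABLE IF NOT EXISTS raw_payments (id TEXT, name TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_payments VALUES (:id, :name, :amount)", rows)

def process_and_store(conn):
    """Process and store: trim, cast, and skip rows that have no amount."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments (id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.execute(
        """
        INSERT OR REPLACE INTO payments
        SELECT CAST(id AS INTEGER), TRIM(name), CAST(amount AS REAL)
        FROM raw_payments
        WHERE TRIM(amount) <> ''
        """
    )
    conn.commit()

def check_consistency(conn):
    """Manage: a basic ongoing check comparing staged rows with cleaned rows."""
    raw = conn.execute("SELECT COUNT(*) FROM raw_payments").fetchone()[0]
    clean = conn.execute("SELECT COUNT(*) FROM payments").fetchone()[0]
    return {"raw": raw, "clean": clean, "rejected": raw - clean}

conn = sqlite3.connect(":memory:")
import_raw(conn, collect(RAW_CSV))
process_and_store(conn)
report = check_consistency(conn)
```

Keeping the staging table separate from the cleaned table means a failed transformation can be rerun without going back to the source.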

Streamlining the data ingestion process allows businesses to improve the accuracy of their data engineering projects, leading to informed decision-making and operational efficiency. The following are the two data ingestion types used in data engineering:

Real-time Processing:

In real-time processing, data is ingested as it is generated and processed immediately. Data engineers build pipelines that act on the data within seconds of its ingestion.
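As a rough illustration of this mode, the sketch below handles each event as soon as it arrives. It assumes a simulated source feeding an in-process queue; a production pipeline would more likely read from a message broker or socket. The event fields and the "reading above 100" rule are hypothetical.

```python
import queue
import threading

def handle_event(event, alerts):
    """Act on one event the moment it is ingested (hypothetical rule: flag high readings)."""
    if event["reading"] > 100:
        alerts.append(event["sensor"])

def consume(events, alerts):
    """Process events one at a time as they arrive, until a None sentinel is seen."""
    while True:
        event = events.get()
        if event is None:
            break
        handle_event(event, alerts)

events = queue.Queue()
alerts = []
worker = threading.Thread(target=consume, args=(events, alerts))
worker.start()

# Simulated source pushing events into the pipeline.
for event in [{"sensor": "a", "reading": 42}, {"sensor": "b", "reading": 130}]:
    events.put(event)
events.put(None)  # signal the end of the stream
worker.join()
```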

Batch Processing:

Here, data is collected offline over a period of time and processed later in batches. Batch jobs run at set intervals, such as daily, or when a condition such as an event trigger is met. It is a standard data ingestion method.
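A minimal sketch of the batch approach, assuming the records have already been accumulated and are processed in fixed-size groups. The batch size and the summing step are placeholders for whatever a scheduled job would actually do.

```python
from itertools import islice

def batches(records, batch_size):
    """Yield successive lists of at most batch_size records."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch

def process_batch(batch):
    """Placeholder processing step: total the amounts in one batch."""
    return sum(record["amount"] for record in batch)

# Records accumulated since the last run (hypothetical data).
accumulated = [{"amount": a} for a in (5, 10, 15, 20, 25)]
batch_totals = [process_batch(b) for b in batches(accumulated, batch_size=2)]
```

In practice a scheduler or an event trigger would call this kind of job; the chunking itself stays the same.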

Importance of Data Ingestion in Data Engineering Project

Data ingestion is essential for improving the value and utility of data in a business, making it a crucial aspect of data engineering. The process involves sending data from multiple sources, such as spreadsheets, JSON data from APIs, CSV files, and log files, to multiple destinations, which are typically relational databases, data lakes, or data warehouses. It is a core aspect of data pipelines and involves different tools for different uses. Data ingestion lays the groundwork for various activities surrounding data analysis and management.

• It provides the foundational data layer that analytics and business intelligence tools depend on. This allows businesses to make better decisions based on the most recently ingested data.

• Implementing data ingestion practices allows companies to enhance the quality and consistency of their data. It also facilitates accurate data analysis based on reliable information.

• Industries such as finance and telecommunications rely heavily on real-time data. This makes efficient data ingestion vital, since it allows immediate processing and analysis and yields timely insights and results.

• Data ingestion allows organizations to scale their infrastructure based on market trends and business needs. It facilitates new data source integration and adjusts to dynamic data volumes.

• Data ingestion ensures businesses adhere to compliance and governance by properly handling and storing data from the outset in accordance with regulatory standards.

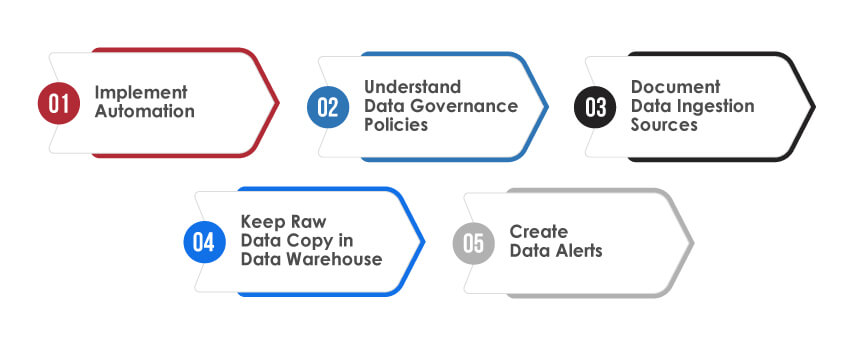

Top 5 Data Ingestion Best Practices

The success of a data engineering project relies on the accuracy and efficiency of the data ingestion process. Implementing best practices is crucial for optimizing performance, ensuring data quality, and maximizing the value of data assets. As a core aspect of the data processing pipeline, ingestion lays a strong foundation to support data engineering initiatives. The following are five key practices that organizations should implement in their data engineering projects:

Implement Automation:

As data volume and complexity grow, it's best to automate processes to reduce manual effort, increase productivity, and save time. With automation, organizations can improve data management processes, achieve infrastructure consistency, and reduce data processing time. For example, extracting, cleaning, and transferring data from delimited files to SQL servers is an ongoing and repetitive process. Integrating tools to automate it can optimize the complete ingestion cycle.
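The delimited-file example could be automated along these lines. This is a sketch, not a reference implementation: SQLite stands in for a SQL server, and the pipe delimiter, the `customers` and `loaded_files` tables, the `.txt` file pattern, and the email check are all assumptions made for illustration.

```python
import csv
import sqlite3
import tempfile
from pathlib import Path

def ingest_directory(conn, directory, delimiter="|"):
    """Extract, clean, and load every new delimited file found in a directory."""
    conn.execute("CREATE TABLE IF NOT EXISTS customers (email TEXT PRIMARY KEY, city TEXT)")
    conn.execute("CREATE TABLE IF NOT EXISTS loaded_files (name TEXT PRIMARY KEY)")
    loaded = 0
    for path in sorted(Path(directory).glob("*.txt")):
        seen = conn.execute(
            "SELECT 1 FROM loaded_files WHERE name = ?", (path.name,)
        ).fetchone()
        if seen:
            continue  # file was handled on an earlier run
        with path.open(newline="") as handle:
            for row in csv.DictReader(handle, delimiter=delimiter):
                email = row["email"].strip().lower()
                if "@" not in email:
                    continue  # cleaning rule: skip rows without a plausible email
                conn.execute(
                    "INSERT OR REPLACE INTO customers VALUES (?, ?)",
                    (email, row["city"].strip()),
                )
                loaded += 1
        conn.execute("INSERT INTO loaded_files VALUES (?)", (path.name,))
    conn.commit()
    return loaded

with tempfile.TemporaryDirectory() as folder:
    Path(folder, "day1.txt").write_text("email|city\n A@Example.com |Pune\nbad-row|Delhi\n")
    conn = sqlite3.connect(":memory:")
    first_run = ingest_directory(conn, folder)   # loads the one valid row
    second_run = ingest_directory(conn, folder)  # nothing new to load
```

Recording which files have been loaded makes the job safe to run repeatedly on a schedule.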

Understand Data Governance Policies:

Set standards, policies, roles, and metrics to ensure seamless and effective data utilization in support of business objectives. Not adhering to laws such as GDPR and HIPAA may lead to regulatory action. By implementing data governance, businesses can address the key risks that lead to poor data handling.

Document Data Ingestion Sources:

Document every data ingestion source, including, for instance, the tools and connectors used to set up the data flow. Also note any changes or updates made to get a connector working. This helps keep track of raw information flows and is useful in situations such as data loss or inconsistencies.
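One lightweight way to keep such documentation is a small machine-readable catalog stored next to the pipeline code. The sketch below is an assumption about what that could look like; the `SourceRecord` fields and the example values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class SourceRecord:
    """Documentation entry for one ingestion source (hypothetical schema)."""
    name: str
    tool: str
    connector: str
    destination: str
    change_log: list = field(default_factory=list)

    def note_change(self, date, description):
        """Record a change made to get or keep the connector working."""
        self.change_log.append({"date": date, "description": description})

orders = SourceRecord(
    name="orders_api",
    tool="custom script",
    connector="REST poller",
    destination="warehouse.raw_orders",
)
orders.note_change("2024-01-15", "Raised page size to 500 to avoid timeouts")

# Serialize the catalog so it can be versioned alongside the pipeline.
catalog_json = json.dumps([asdict(orders)], indent=2)
```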

Keep Raw Data Copy in Data Warehouse:

Keeping a copy of the raw data in a separate warehouse database provides a backup in case data processing or modeling fails. Enforce strict read-only access and keep transformation tools away from this copy so that the raw data stays reliable.
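As a small illustration of read-only access, the sketch below uses SQLite's `mode=ro` URI option, with a temporary file standing in for the raw database. A real warehouse would enforce this through roles and grants instead; the `raw_events` table and its payload are hypothetical.

```python
import sqlite3
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as folder:
    raw_path = Path(folder, "raw.db")

    # The ingestion job is the only writer of the raw copy.
    writer = sqlite3.connect(str(raw_path))
    writer.execute("CREATE TABLE raw_events (payload TEXT)")
    writer.execute("INSERT INTO raw_events VALUES (?)", ('{"id": 1}',))
    writer.commit()
    writer.close()

    # Everyone else opens the raw copy in read-only mode.
    reader = sqlite3.connect(raw_path.as_uri() + "?mode=ro", uri=True)
    rows = reader.execute("SELECT payload FROM raw_events").fetchall()
    try:
        reader.execute("DELETE FROM raw_events")
        write_blocked = False
    except sqlite3.OperationalError:
        write_blocked = True  # the read-only connection rejects writes
    reader.close()
```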

Create Data Alerts:

Use tools like Slack to set up alerts for data testing, so that problems can be debugged at the source while issues in data models are being fixed. This reduces errors, maintains data flow consistency, and improves the productivity and reliability of data.
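A minimal sketch of such an alert, assuming a Slack incoming webhook, which accepts a JSON body with a `text` field. The webhook URL below is a placeholder, and the row-count check, table name, and threshold are hypothetical. The network call is left commented out so the example runs without credentials.

```python
import json
import urllib.request

def check_row_count(table, actual, expected_min):
    """Return an alert message if a load looks too small, otherwise None."""
    if actual < expected_min:
        return f"Ingestion alert: {table} loaded {actual} rows, expected at least {expected_min}"
    return None

def build_slack_request(webhook_url, message):
    """Build the POST request for a Slack incoming webhook."""
    body = json.dumps({"text": message}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

WEBHOOK_URL = "https://hooks.slack.com/services/PLACEHOLDER"  # placeholder, not a real webhook

message = check_row_count("raw_orders", actual=12, expected_min=1000)
request = build_slack_request(WEBHOOK_URL, message) if message else None
# To send the alert, call urllib.request.urlopen(request) with a real webhook URL.
```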

Conclusion

Data ingestion has become a key part of any data engineering project in today's data-centric business environment. The process involves collecting, importing, processing, and managing data, allowing businesses to use their data assets fully. Real-time and batch processing are the two types that serve the specific needs of data engineering. Effective data ingestion supports informed decision-making, compliance with legal standards, and strategic planning. Implementing best practices such as data governance, automation, and thorough documentation is also crucial to ensure the efficiency and integrity of the data ingestion process.

How can TestingXperts Help with Data Ingestion?

TestingXperts, with its expertise in quality assurance and data engineering, plays a crucial role in optimizing your data ingestion process. Our data testing services ensure that your data is accurately ingested, processed, and ready for analysis. We offer customized QA solutions to handle multiple data types and sources to ensure data integrity and compliance with industry standards. Partnering with TestingXperts gives you the following benefits:

• We have extensive experience in Analytics Testing, Data Warehousing (DW), and Big Data testing engagements, and we address the unique challenges of DW and Big Data analytics testing.

• Our QA experts test the DW applications at all levels, from data sources to the front-end BI applications, and ensure the issues are detected at the early stages of testing.

• Our customized data testing approach ensures data accuracy at various levels of data engineering projects.

• We have partnered with QuerySurge to automate your DW verification and ETL process.

• Our testing approach covers extensive test validation and coverage to ensure quality and reliability in the data ingestion process.

• Our team is proficient in DMM (Data Maturity Model) and ensures that all industry standards are adhered to during the validation of ingested data.

To know more, contact our QA experts now.
