
Don’t Trust AI Blindly: A Leader’s Approach to AI Model Validation for Accuracy and Performance


Artificial Intelligence (AI) has already moved from implementation to the operational stage. According to a McKinsey survey, 88% of leaders claim to use AI models in their daily operations. However, using the technology at this scale also introduces risks. Enterprises now use AI-based solutions for credit decisions, CRM management, pricing, patient care, and software releases. In these settings, treating a model as only “close to regulated” is no longer acceptable. Enterprises need a dedicated AI model validation approach to ensure their solutions perform reliably and securely under real-world conditions.

Why AI Model Testing and Validation Matter to Business Leaders

According to the Stanford AI Index, 233 AI-related incidents were reported in 2024, a 56.4% increase over 2023. As a leader, you must keep track of such incidents to prevent similar ones at your organization. You own not just the code but also the outcomes. Evaluating AI models is essential for creating a governance-ready solution. It protects against:

- Financial leakage from silent accuracy drifts and decay.

- Regulatory failure when models appear to be biased or cannot be audited.

- Reputational loss when incorrect outputs reach regulators or users.

- Operational fatigue when performance degrades under production constraints.

Research shows that AI assistants can make significant errors while still sounding confident. That’s why validating AI accuracy is essential, and businesses must treat model outputs as measurable system behavior.

Defining AI Model Validation: What Every Leader Should Know

AI/ML model validation ensures that your model is checked for accuracy, fairness, operational constraints, and robustness. It helps you answer the following questions:

| Question | What It Covers |
| --- | --- |
| Does it work? | Validating AI accuracy and comparing it with business goals using metrics. |
| Will it continue to perform? | Stability under shift, including stress tests and drift readiness. |
| Is it secure and fair? | Bias and fairness testing for AI, along with cybersecurity testing for LLM-style attacks. |
| Can it be governed? | Traceability, documentation, and monitoring aligned with the risk factors involved. |

According to the NIST AI Risk Management Framework, testing and monitoring should be ongoing tasks that assess the validity and reliability of your AI model under real-world conditions. AI development and testing involve metrics, scenarios, and stress checks to find weaknesses and fine-tune the model. Validation confirms that the model meets defined performance, fairness, security, and reliability thresholds before release, and continuous monitoring confirms that it keeps meeting them after deployment.

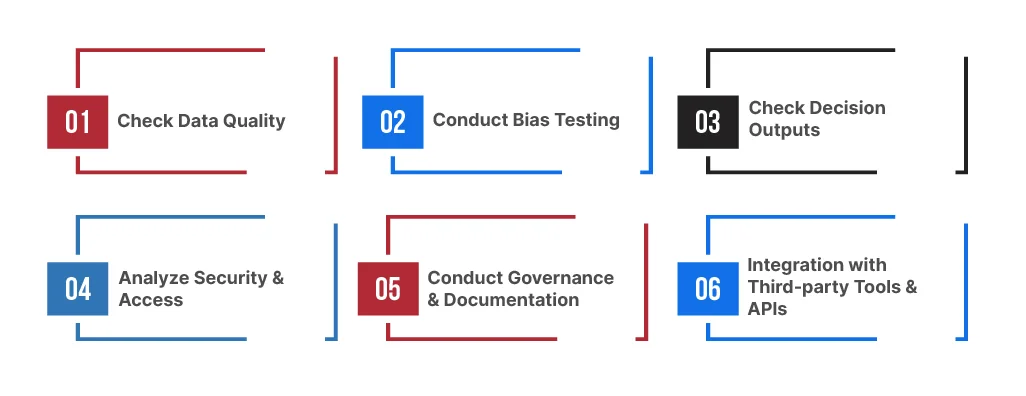

Checklist for Validating AI Accuracy & Performance

Organizations must treat AI model testing as a proactive and regular process to avoid pitfalls. From risk analysis to predictive analytics and fraud detection, validating AI for accuracy and performance is a top priority. Use the checklist below for your AI model validation and performance evaluation:

Check Data Quality:

Ensure the data used to train your AI models is of high quality and well-structured. Identify biased data and audit data lineage and privacy protections to ensure accuracy and integrity before deploying the model.
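A data quality check of this kind can start very small. The sketch below is only an illustration, assuming the training data is available as a list of dictionaries; the function name `data_quality_report` and the field names in the usage note are hypothetical, not part of any specific tool.

```python
from collections import Counter

def data_quality_report(rows, required_fields, label_field):
    """Summarize missing values, exact duplicate rows, and label balance."""
    missing = Counter()
    labels = Counter()
    seen = set()
    duplicates = 0
    for row in rows:
        # Count empty or absent values for each field the model depends on.
        for field in required_fields:
            if row.get(field) in (None, ""):
                missing[field] += 1
        # Two rows are duplicates when every key/value pair matches.
        key = tuple(sorted(row.items()))
        if key in seen:
            duplicates += 1
        seen.add(key)
        labels[row.get(label_field)] += 1
    return {
        "rows": len(rows),
        "missing": dict(missing),
        "duplicates": duplicates,
        "label_counts": dict(labels),
    }
```

For example, calling `data_quality_report(rows, ["age", "income"], "approved")` on a loan dataset would show how many records lack an age or income and whether approvals and rejections are heavily imbalanced, both of which are worth reviewing before training.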

Conduct Bias Testing:

Run bias and fairness tests to compare outputs across demographic groups. Evaluate accuracy, recall, and other relevant metrics for each group separately, because a model that looks accurate overall can still underperform for one group. Your model should be non-discriminatory and explainable.
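One simple way to make a fairness test concrete is to compute recall per group and look at the largest gap. The sketch below assumes binary labels (1 for the positive outcome) and uses hypothetical function names; recall gap is just one of several fairness measures, and the acceptable gap is a policy decision rather than a fixed standard.

```python
from collections import defaultdict

def recall_by_group(records):
    """records: iterable of (group, y_true, y_pred) tuples with 0/1 labels."""
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    # Only groups with at least one actual positive have a defined recall.
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}

def max_recall_gap(records):
    """Difference between the best-served and worst-served group."""
    recalls = recall_by_group(records)
    if not recalls:
        return 0.0
    return max(recalls.values()) - min(recalls.values())
```

A large gap means the model misses qualifying cases far more often for one group than another, which is a signal to investigate the training data and features before release.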

Check Decision Outputs:

Check whether the decisions and predictions made by your AI model are accurate and hallucination-free. Use explainability tools like SHAP and LIME to see which input features drive individual decisions. Conduct continuous monitoring to identify anomalies and flag misaligned trends.

Analyze Security & Access:

Audit access controls for sensitive data, system configuration, and model training. Verify encryption and privacy settings, and maintain access logs and user roles. These checks help prevent unauthorized access and data leaks.

Maintain Governance & Documentation:

Document everything from data sheets and risk assessments to model cards and change logs. Keep your records up-to-date for all AI model versions and record ethical reviews and leadership decisions.

Review Third-party Tools & APIs:

When opting for third-party tools, ensure that you thoroughly review them before integrating them into your workflows. Draft a detailed SLA for transparency and include compliance terms in the contract.

Embedding Validation into AI Deployment and Monitoring

AI model testing and validation should be a lifecycle practice, from development to deployment. The process involves turning validation into release gates that are hard to bypass. Maintain model cards and documentation, so stakeholders understand the scope, limits, and known risks. Build automated evaluation pipelines that rerun whenever data, code, prompts, or retrieval sources change, and treat those results as required evidence for promotion. Finally, implement approval workflows aligned with risk tiers, so that low-impact use cases move more quickly, while medium- and high-impact deployments receive more thorough scrutiny and formal sign-off.
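A release gate of this kind can be expressed as a small check that a pipeline runs before promotion. The sketch below is a minimal illustration; the function name, metric names, and threshold values are hypothetical and would be set per risk tier by your own governance process.

```python
def evaluate_release_gate(metrics, thresholds):
    """Return (passed, failures) for a candidate model.

    thresholds maps a metric name to ("min" or "max", limit).
    A metric missing from the results counts as a failure, so the
    gate cannot be bypassed by skipping an evaluation step.
    """
    failures = []
    for name, (direction, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing from evaluation results")
        elif direction == "min" and value < limit:
            failures.append(f"{name}: {value} is below the minimum {limit}")
        elif direction == "max" and value > limit:
            failures.append(f"{name}: {value} is above the maximum {limit}")
    return (not failures, failures)

# Hypothetical thresholds for a high-impact use case.
HIGH_RISK_THRESHOLDS = {
    "accuracy": ("min", 0.90),
    "recall_gap": ("max", 0.05),
    "p95_latency_ms": ("max", 300),
}
```

In a CI/CD job, the list of failures can be written to the build log and stored alongside the model card, so the evidence for each promotion decision is auditable.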

Once the model is deployed, monitor degradation and unintended behavior with the same rigor you apply to uptime. Track data drift and concept drift, and watch for outcome drift when business KPIs shift, even if offline scores look stable. When thresholds are breached, your operating checklist should specify clear actions such as retraining, rollback, tighter guardrails, or human-in-the-loop routing, consistent with NIST guidance to sustain trust through ongoing testing and monitoring.
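Data drift on a single numeric feature is often tracked with the Population Stability Index (PSI), which compares the distribution seen in production against the training baseline. The sketch below is one possible implementation using equal-width bins; the function name is hypothetical, and the commonly quoted cut-offs (roughly 0.1 for moderate shift and 0.25 for significant shift) are rules of thumb rather than a standard.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a production sample."""
    lo, hi = min(expected), max(expected)
    # Fall back to a width of 1.0 if the baseline has a single value.
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range go to the edge bins.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # Floor at a small value so empty bins do not cause log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    base = proportions(expected)
    prod = proportions(actual)
    return sum((p - b) * math.log(p / b) for b, p in zip(base, prod))
```

A monitoring job could compute this per feature on a schedule and trigger the actions in the operating checklist, such as retraining or rollback, when the value crosses the agreed threshold.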

How can TestingXperts Assist with AI Model Testing?

AI models evolve as data updates, and accuracy can degrade if proper validation and testing parameters are not set. However, enterprises often struggle to scale AI model validation, creating inconsistent standards and unclear ownership. TestingXperts QE for AI services help you convert AI model testing into a repeatable and audit-ready practice. Our solutions deliver:

- 80% improved response consistency

- 80% faster model response times

- 50% reduced manual effort

- 90% easy integration with CI/CD

Want to simplify your AI model validation and evaluation practice? Contact our AI experts to learn more about our services.

Conclusion

If you are dealing with AI-based solutions, remember that you cannot manage what you cannot measure. AI models can produce significant errors if they are not thoroughly tested and validated. So, how do you validate AI model accuracy? Treat AI model testing as a proactive, ongoing practice. Define success/failure costs, select the right model accuracy metrics, apply cross-validation methods for AI, and keep assessing AI model performance in production.

FAQs

AI model validation is the process of evaluating an AI/ML model to ensure it performs accurately, fairly, and reliably before and after deployment. It helps confirm the model meets defined business goals and regulatory requirements, and prevents errors, bias, and performance drift in real-world use.

Common challenges in AI model testing include:

- Data quality issues – Incomplete, biased, or noisy data can lead to inaccurate test results.

- Lack of labeled data – Limited or expensive labeled datasets make validation difficult.

- Model bias and fairness – Detecting and mitigating bias across different user groups is complex.

- Non-deterministic behavior – AI models may produce different outputs for the same input.

Testing uses quantitative measures like accuracy, precision, recall, and F1-score to evaluate how often the model’s predictions are correct and aligned with expected outputs. It also includes checks for bias and performance under varied conditions.
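For a binary classifier, these four measures all follow from the counts of true and false positives and negatives. The sketch below shows the standard formulas in plain Python; the function name is hypothetical, and returning 0.0 when a denominator is zero is a convention chosen for this example.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    # Precision: of everything flagged positive, how much was right.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: of everything actually positive, how much was found.
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }
```

Looking at all four together matters because accuracy alone can hide problems: on imbalanced data, a model can score well on accuracy while missing most of the rare cases the business cares about, which shows up as low recall.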

- Poor data leads to inaccurate predictions

- Biased data creates unfair models

- Clean, diverse data improves reliability

High-quality data reduces bias, improves model accuracy, and prevents training on corrupted inputs. It also ensures consistent results during inference and retraining.
